Introduction to RDF Knowledge Engineering

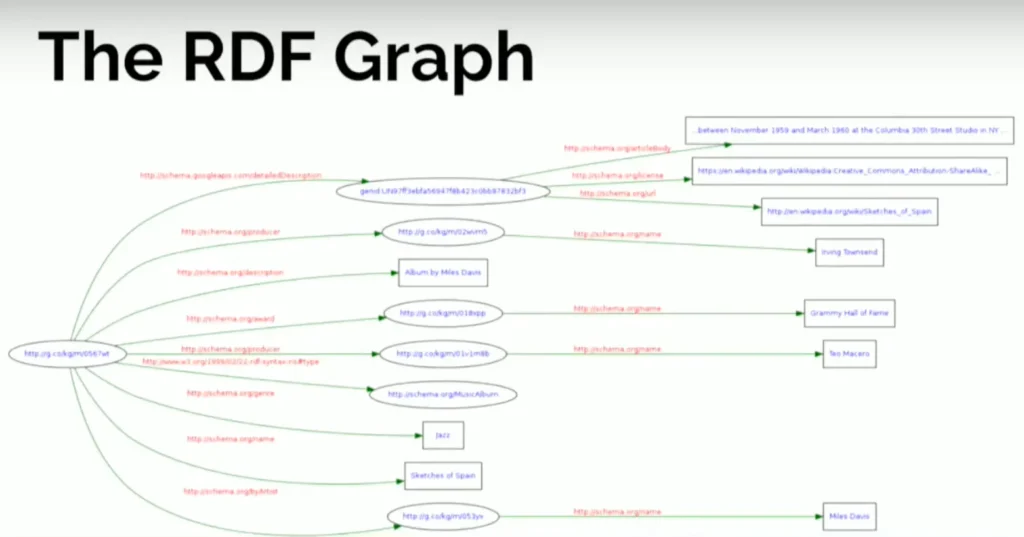

As someone who has had the pleasure to work with semantic technologies for many years, I have witnessed the Resource Description Framework (RDF) grow from a W3C standard to a foundational piece of modern knowledge management. Ultimately, RDF is a straightforward and powerful way to represent relationships between entities in subject-predicate-object “triples.”

So instead of storing unrelated data points, you’re building a web of related meaning. A well designed RDF implementation becomes the basis of complex knowledge graphs, which connect different points of information across different domains. Semantic relationships are leveraged by organizations like Google, Amazon, and Netflix to supplement their search results, recommendations, and general user experience.

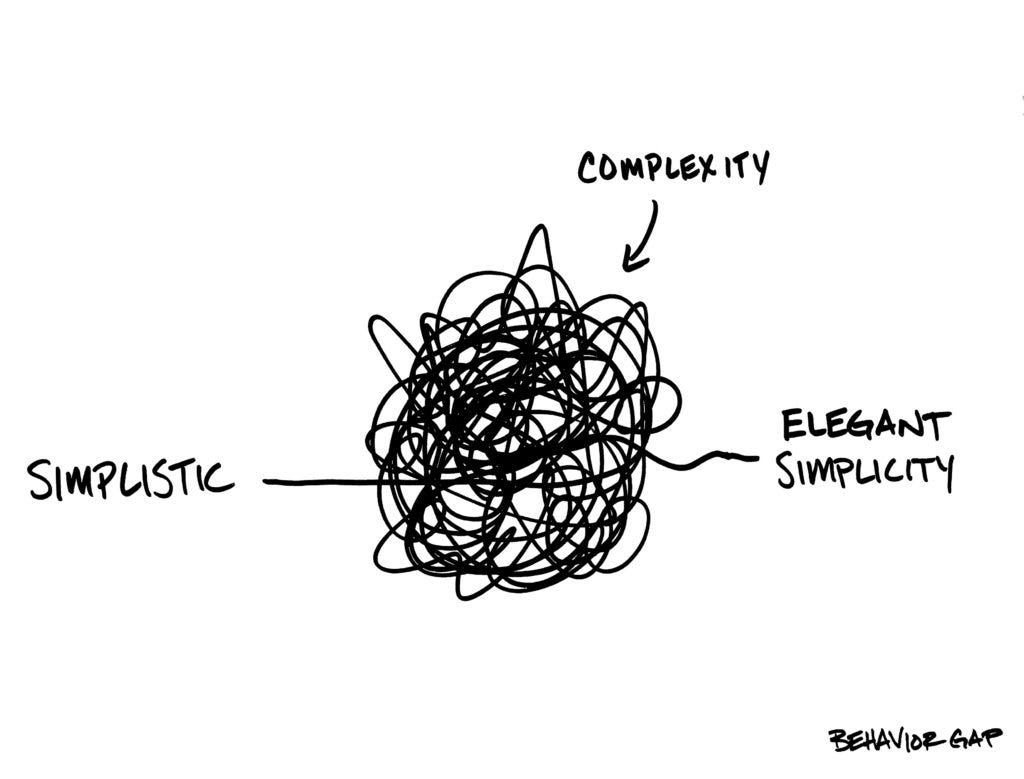

However, the road to implementing RDF knowledge bases and knowledge graphs can be long and winding. With the growth of data and business, requirements are far more complex than they were years ago. Many organizations are challenged to realize the full vision of the semantic web. In many enterprise settings, the adoption of semantic knowledge bases inevitably halts due to technical barriers, lack of resources, and the initial learning curve associated with semantic technologies.

Challenge 1: Complex Data Integration

Anyone who has tried to build a comprehensive knowledge base will know the agony of trying to combine heterogeneous data sources. I once worked with a pharmaceutical company that wanted to combine structured clinical trial data with unstructured research papers and regulatory documents.

Differences in terminology, format, and schema meant we faced difficult alignment challenges. Integration of structured data with unstructured data was frequently seen as common semantic alignment and differences between databases and the messy world of unstructured text invariably required a level of sophistication in natural language processing to extract and relate information to derive meaning.

Knoodl’s approach to resolving this situation was by using automated ontology mapping tools that could highlight possible semantic links between disparate sources. The addition of visual data transformation pipelines allowed knowledge engineers to set rules to convert varying inputs, to achieve consistent RDF triples. In actual terms, less time was spent on custom ETL (extract, transform, load) scripts and more time was spent on knowledge representation.

Challenge 2: Scalability Limitations

Anyone who has tried to build a comprehensive knowledge base will know the agony of trying to combine heterogeneous data sources. I once worked with a pharmaceutical company that wanted to combine structured clinical trial data with unstructured research papers and regulatory documents. Differences in terminology, format, and schema meant we faced difficult alignment challenges.

Integration of structured data with unstructured data was frequently seen as common semantic alignment and differences between databases and the messy world of unstructured text invariably required a level of sophistication in natural language processing to extract and relate information to derive meaning.

Knoodl’s approach to resolving this situation was by using automated ontology mapping tools that could highlight possible semantic links between disparate sources. The addition of visual data transformation pipelines allowed knowledge engineers to set rules to convert varying inputs to achieve consistent RDF triples. In actual terms, less time was spent on custom ETL (extract, transform, load) scripts, and more time was spent on knowledge representation.

Challenge 3: Collaborative Development

Building knowledge bases is rarely a solo endeavor. Teams of domain experts, ontologists, and developers must work together, often leading to workflow challenges. Version control becomes particularly thorny with ontologies, as even small changes can have cascading effects throughout the knowledge graph.

I recall a project where three different experts independently modified the same ontology, resulting in painful merge conflicts and semantic inconsistencies that took weeks to resolve. These collaborative challenges multiply in larger organizations where teams may work across different locations and time zones.

Knoodl’s collaboration features address these pain points through Git-integrated ontology management. Changes are tracked at a granular level, making it easier to understand who modified what and why. Their role-based access controls ensure that team members can only modify portions of the knowledge base relevant to their expertise, reducing the risk of unintended consequences.

Challenge 4: Query Complexity

SPARQL, the standard query language for RDF, presents a steep learning curve for newcomers. Its syntax differs significantly from SQL, requiring specialized knowledge that many developers lack. I’ve seen organizations invest heavily in RDF infrastructure only to struggle with extracting meaningful insights due to query complexity.

Performance tuning for SPARQL queries introduces additional challenges. Without proper indexing and query optimization, even simple queries can consume excessive resources and produce slow results.

Knoodl simplifies this aspect through a visual query builder that allows users to construct SPARQL queries using intuitive drag-and-drop interfaces. Behind the scenes, their query optimization analytics automatically suggest improvements and identify potential bottlenecks. This democratizes access to the knowledge base, allowing business users to extract insights without becoming SPARQL experts.

Challenge 5: Maintenance Overhead

One of the most underestimated challenges is the continual upkeep of RDF knowledge bases. Business needs change, schemas drift, and dependencies grow. RDF knowledge bases will rapidly become stale, incomplete, or internally inconsistent without appropriate governance.

Complexity increases dramatically with dependencies, especially when your ontologies reference external sources that may change without notice. I have I felt the frustration of realizing that an essential external vocabulary was changed, losing hundreds of pre-existing relationships with entities in our knowledge base. Knoodl has some great maintenance tools that show real promise in this area.

Their impact analysis automatically looks for dependencies and identifies potential ripple effects before you implement changes. Their change propagation visualizer allows your team to understand the full impact of ontology changes and reduce the likelihood of unintentional effects.

Future Direction

The barriers to creating RDF knowledge bases are substantial, but technologies such as Knoodl are already making semantic technologies much more accessible and useful in Enterprise contexts. Several organizations using Knoodl reported eye-popping time freedom in knowledge integration time (42% average time saved), as well as query performance (average 3x faster on complex graph traversals).

Very shortly, Knoodl is looking to deploy machine learning functions to automate owl:equivalentTo ontology alignment, and develop more natural language front ends for knowledge exploration. With the growth of industry adoption of semantic technologies, it will be vital to utilize the tools that make the enterprise semantic technology industry simpler to adopt and realize real revolutionary value.

It’s not just seeing more data – it’s seeing more meaning of RDF knowledge bases in the future. And that’s a solvable challenge.